How we accidentally burned through 200GB of proxy bandwidth in 6 hours

Context

Skyvern is an AI agent that helps companies automate workflows in the browser. We leverage proxy networks and run headful browser instances in the cloud to facilitate most of our automations.

😱 200GB of proxy bandwidth is approximately $500, burned over the course of 6 hours

One fateful morning

I woke up one morning to an alarm on my phone – Skyvern's failure rate was through the roof. Classic startup moment.

Looking through our alerts, I noticed that our proxy bandwidth alert had fired off. That's weird – we just renewed our plan a few days ago, and should have enough quota to last a month.

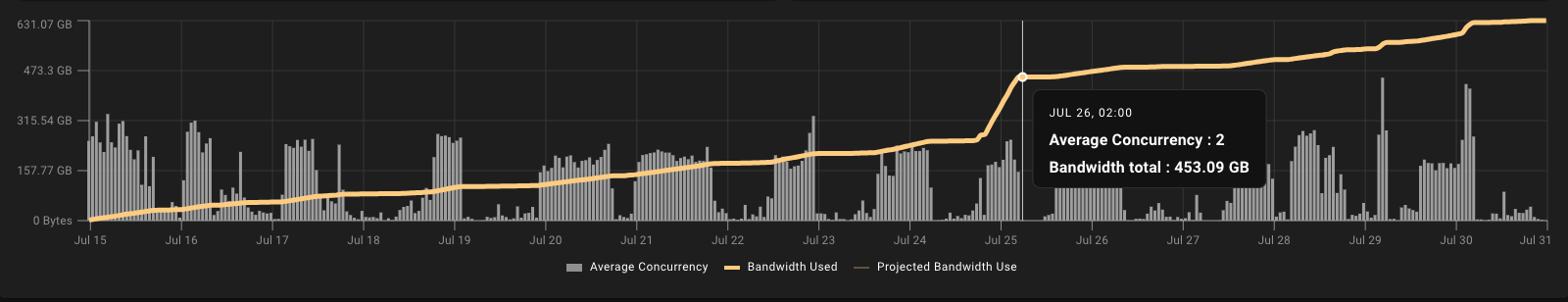

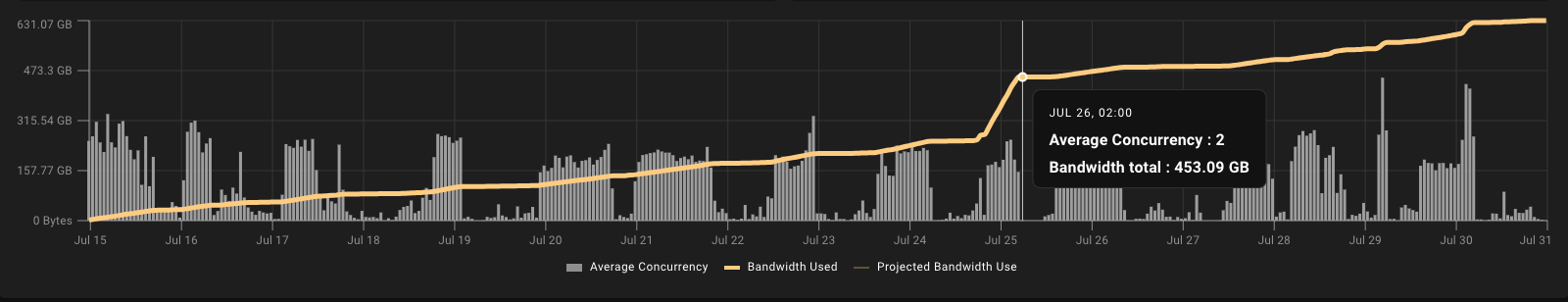

I took a look at our metrics and saw something that made my heart sink – we just burned through 200GB of proxy bandwidth in the last 6 hours. HOW DID THAT HAPPEN?

My first thought was – did we get compromised? We give all new users $5 of credits to play around with.. and have had users try to abuse it before. Did someone figure out how to create millions of accounts and burn our bandwidth?

Digging deeper

Taking a quick look at our usage stats.. no.. nothing out of the ordinary.

OK. Let's dig a little bit deeper – where is this bandwidth going?

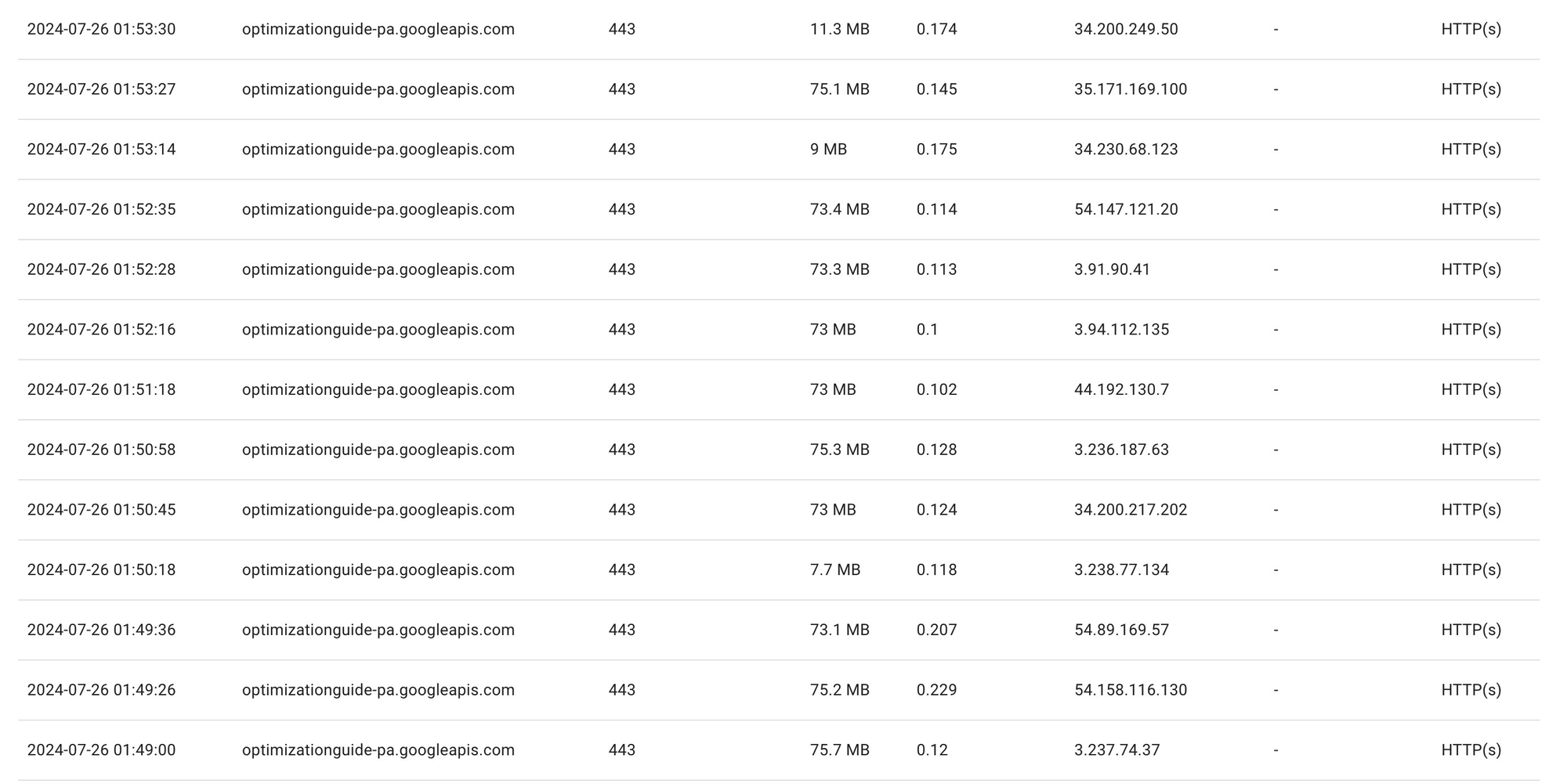

What's going on? Why is there a random call to optimizationguide-pa.googleapis.com occupying 70MB of bandwidth.. over and over and over again?
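To get a feel for the scale, here is a back-of-envelope calculation using only the numbers above. It assumes decimal units (1 GB = 1000 MB) and, as a deliberate upper bound, pretends that all 200GB came from this one 70MB download, which the metrics don't actually confirm.

```python
# Back-of-envelope numbers; decimal units (1 GB = 1000 MB) are an assumption.
TOTAL_GB = 200          # bandwidth burned, from the alert
TOTAL_COST_USD = 500    # approximate cost of that bandwidth
WINDOW_HOURS = 6
DOWNLOAD_MB = 70        # size of each optimizationguide-pa.googleapis.com call

cost_per_gb = TOTAL_COST_USD / TOTAL_GB
cost_per_download = cost_per_gb * DOWNLOAD_MB / 1000

# Upper bound: pretends all 200 GB came from this single repeated download.
max_downloads = TOTAL_GB * 1000 / DOWNLOAD_MB
seconds_between = WINDOW_HOURS * 3600 / max_downloads

print(f"${cost_per_gb:.2f} per GB")
print(f"${cost_per_download:.3f} per download")
print(f"up to ~{max_downloads:.0f} downloads, one every ~{seconds_between:.1f}s")
```

Even as a rough bound, that is thousands of repeated downloads of the same file, which is hard to explain with normal browsing.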

A quick Google search turned up this thread:

https://www.reddit.com/r/chrome/comments/truz5d/the_chrome_downloads_for_no_reason_whatsoever/

OK, this is starting to make sense. Google's downloading a machine learning model in the background to cache, and we seem to be downloading it over and over again.

We don't currently persist browser state between sessions.. did we end up in a state where Google wants to cache the model, and we keep resetting to the uncached version?

Potential solutions

We have a few options to fix this:

#1 - Run Chrome locally and save the locally generated user_data_dir, which includes the model, effectively caching it.

Problem: if the cached model becomes stale, Chrome would trigger the download again in the future

#2 - Add a rule to our sidecar proxy to block this specific Google domain – prevent Chrome from downloading the model in the first place

`- DOMAIN-SUFFIX,optimizationguide-pa.googleapis.com,REJECT`
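For readers unfamiliar with this kind of rule, here is a minimal sketch of what a domain-suffix rejection might do. It assumes the usual suffix semantics (the domain itself plus any subdomain matches); the function names and the `BLOCKED_SUFFIXES` list are hypothetical and are not taken from our proxy's code.

```python
# Hypothetical rule list mirroring the DOMAIN-SUFFIX rule above.
BLOCKED_SUFFIXES = ["optimizationguide-pa.googleapis.com"]


def matches_suffix(host: str, suffix: str) -> bool:
    """True if host is the suffix itself or a subdomain of it."""
    host = host.lower().rstrip(".")
    suffix = suffix.lower()
    # Require a dot boundary so "evil-example.com" does not match "example.com".
    return host == suffix or host.endswith("." + suffix)


def should_reject(host: str) -> bool:
    """True if any blocked suffix matches the requested host."""
    return any(matches_suffix(host, s) for s in BLOCKED_SUFFIXES)


print(should_reject("optimizationguide-pa.googleapis.com"))
print(should_reject("www.googleapis.com"))
```

The point of matching on the full hostname rather than on `googleapis.com` is to keep the block narrow: other Google APIs that an automation might legitimately need stay reachable.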

Going back to sleep

We decided to do both to solve this problem. We updated our user_data_dir to get a quick fix out, and also updated our sidecar proxy to block that specific URL from triggering downloads in the future.
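As a rough illustration of the user_data_dir half of the fix, the sketch below copies a pre-warmed profile into a fresh directory for each session, so every browser starts with the model already on disk without sessions sharing state. The helper names and the seed directory layout are assumptions made for this example, not our actual launcher code; `--user-data-dir` is the standard Chrome switch for pointing at a profile directory.

```python
import shutil
import tempfile
from pathlib import Path


def prepare_session_profile(seed_dir: Path) -> Path:
    """Copy a pre-warmed Chrome profile into a fresh per-session directory."""
    session_dir = Path(tempfile.mkdtemp(prefix="chrome-profile-"))
    # mkdtemp already created the directory, so allow copying into it.
    shutil.copytree(seed_dir, session_dir, dirs_exist_ok=True)
    return session_dir


def chrome_args(profile_dir: Path) -> list[str]:
    """Launch arguments that point Chrome at the copied profile."""
    return [f"--user-data-dir={profile_dir}"]
```

Copying the seed rather than mounting it directly keeps sessions isolated from each other, at the cost of some extra disk I/O per launch.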