Best Practices for Web Scraping Without Getting Banned

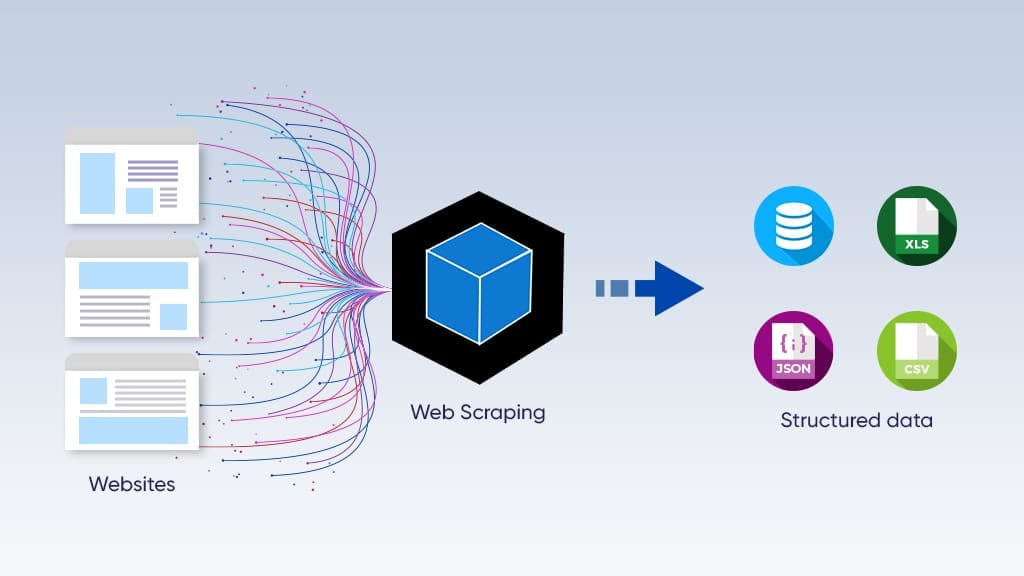

Web scraping lets users quickly collect large amounts of data from the internet. However, every web scraper risks being banned by websites that place anti-bot restrictions around their data. This article explores best practices for web scraping while avoiding bans. We will cover the common anti-bot measures websites use, present strategies to lower these risks, and discuss the effective use of tools like proxies and headless browsers. Following these guidelines will give your web scrapers the necessary components to tackle the tough world of web scraping!

Common Anti-bot Measures in Web Scraping

Many websites implement anti-bot measures to block, deter, and detect scraping efforts. Understanding these challenges can help you efficiently navigate the process of building web scrapers.

- CAPTCHAs. CAPTCHA stands for Completely Automated Public Turing test to tell Computers and Humans Apart. Web scraping bots often fail these tests because they usually require a human to identify or interact with distorted text or objects. Skyvern’s built-in CAPTCHA solver is designed to automatically solve various tests and avoid typical bot blocks.

- Rate limiting. This restricts how many requests a client can send to a server within a given time window, which prevents excessive server load. Each website sets its own request thresholds as indicators of automated activity, and exceeding them increases the likelihood of temporary or permanent blocking.

- Honeypot traps. Honeypots are page elements that are hidden from human visitors but visible to bots parsing the HTML. These traps might include hidden forms or links; when a bot engages with them, it triggers an alert that can lead to the scraper's IP being banned.

Grasping these anti-bot measures is very important for anyone who wants to dive into web scraping. These systems identify and restrict bot activity, and understanding how they work helps in developing better scraping strategies.
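As an illustration of the honeypot idea, here is a minimal sketch that skips links hidden with inline markup. It assumes the trap is hidden through the link's own `style` or `hidden` attribute; real sites may hide traps through external CSS or parent elements, which this sketch does not detect. The names `VisibleLinkCollector` and `visible_links` are hypothetical helpers, not part of any library.

```python
from html.parser import HTMLParser


class VisibleLinkCollector(HTMLParser):
    """Collects href values from <a> tags that are not hidden inline."""

    # Inline style fragments that hide an element (spaces removed before matching).
    HIDDEN_MARKERS = ("display:none", "visibility:hidden")

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        # Normalize so "display: none" and "display:none" both match.
        style = (attributes.get("style") or "").replace(" ", "").lower()
        hidden_by_style = any(marker in style for marker in self.HIDDEN_MARKERS)
        hidden_by_attr = "hidden" in attributes
        if not (hidden_by_style or hidden_by_attr):
            self.links.append(href)


def visible_links(html):
    """Return hrefs of links that a human visitor could plausibly see."""
    collector = VisibleLinkCollector()
    collector.feed(html)
    return collector.links
```

A scraper would then follow only the URLs returned by `visible_links(page_html)` instead of every link found in the page source.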

Best Practices for Web Scraping

Following guidelines for best practices can help scrapers avoid getting blocked or banned while obtaining needed information from target websites.

- Headless browsers

These browsers operate without a graphical interface but can interact with web pages programmatically. This is important for scraping sites with heavy JavaScript where the data is dynamically loaded. Skyvern utilizes headless browsers to handle complex page elements more effectively than traditional scraping methods.

- Management of request rate

Sending many requests in rapid succession can trigger anti-bot systems. We suggest a request frequency suited to the target website, with random pauses between requests.
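The random pauses could be sketched as follows. The 1 to 3 second bounds are illustrative assumptions, not recommendations for any particular site, and `fetch` stands in for whatever function actually downloads a page.

```python
import random
import time


def polite_delay(min_seconds=1.0, max_seconds=3.0):
    """Return a random pause length within the given bounds (assumed values)."""
    return random.uniform(min_seconds, max_seconds)


def fetch_all(urls, fetch, sleep=time.sleep, min_seconds=1.0, max_seconds=3.0):
    """Call fetch(url) for each URL, pausing a random interval between calls."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            # No pause before the first request, a random one before each later request.
            sleep(polite_delay(min_seconds, max_seconds))
        results.append(fetch(url))
    return results
```

Passing `sleep` as a parameter keeps the pacing logic easy to test without actually waiting.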

- Rotating user agents

Websites can see the user agent sent with each request and can flag a scraper that always sends the same one. Varying the user agent across requests makes scraping look more like normal user activity, which decreases the risk of being flagged.
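A minimal sketch of user agent rotation with the standard library is shown below. The strings in `USER_AGENTS` are illustrative examples that may be outdated; in practice the list would be kept current. `build_request` is a hypothetical helper that only constructs the request and does not send it.

```python
import random
import urllib.request

# Illustrative user agent strings (assumed examples, may be outdated).
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def build_request(url, user_agents=USER_AGENTS):
    """Build a request whose User-Agent header is picked at random from the pool."""
    headers = {"User-Agent": random.choice(user_agents)}
    return urllib.request.Request(url, headers=headers)
```

The returned object can be passed to `urllib.request.urlopen` when the scraper is ready to send it.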

- Keeping scraping tools up to date

Websites change their layouts and defenses often. Traditional web scrapers will need to be updated to navigate through these changes. Skyvern combines computer vision and AI to imitate human browsing, which makes it possible to adapt to dynamic web layouts.

- Error handling

Scrapers should be able to handle HTTP errors and unexpected changes in a page. For example, retrying failed requests after random delays can get past temporary blocks, making your scraping setup more reliable.
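One way to retry with random delays is exponential backoff with jitter, sketched below. This is a swapped-in technique rather than something the article prescribes: the backoff schedule, the attempt limit, and the `RetryableError` class are all assumptions made for illustration.

```python
import random
import time


class RetryableError(Exception):
    """Hypothetical error raised by fetch for failures worth retrying."""


def backoff_delay(attempt, base=1.0, cap=30.0):
    """Random delay between 0 and min(cap, base * 2**attempt) seconds."""
    return random.uniform(0, min(cap, base * 2 ** attempt))


def fetch_with_retries(fetch, url, max_attempts=4, sleep=time.sleep):
    """Call fetch(url), retrying on RetryableError with a random, growing delay."""
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except RetryableError:
            if attempt == max_attempts - 1:
                raise  # Out of attempts: surface the last error to the caller.
            sleep(backoff_delay(attempt))
```

The `fetch` callable decides which failures are temporary, for example by raising `RetryableError` on HTTP 429 or 503 responses and letting other errors propagate immediately.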

- Using proxies

A single IP might easily be logged and banned. A pool of proxies spreads requests over various IPs. This strategy helps when sites have strict anti-scraping rules. Skyvern’s built-in proxy network supports country, state, or even precise zip-code level targeting.
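Spreading requests over a pool can be sketched with simple round-robin rotation. The proxy addresses below are placeholders on a reserved example domain, and this sketch says nothing about how Skyvern's own proxy network works; `proxy_cycle` and `opener_for` are hypothetical helpers.

```python
import itertools
import urllib.request

# Placeholder proxy addresses (assumptions for illustration only).
PROXIES = [
    "http://proxy-a.example:8080",
    "http://proxy-b.example:8080",
    "http://proxy-c.example:8080",
]


def proxy_cycle(proxies=PROXIES):
    """Return an endless round-robin iterator over the proxy pool."""
    return itertools.cycle(proxies)


def opener_for(proxy_url):
    """Build a urllib opener that routes HTTP and HTTPS traffic through one proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)
```

A scraper would call `next(rotation)` before each request, where `rotation = proxy_cycle()`, and send the request with `opener_for(proxy).open(url)`.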

By following these best practices for web scraping, we can confidently gather important data while reducing disruptions from anti-bot measures.

Conclusion

In conclusion, mastering web scraping takes technical skills and a good grasp of practices that prevent bans. In this blog post, we talked about anti-bot measures that sites use to guard their data and discussed strategies like using proxies and headless browsers to deal with those challenges. Follow these best practices to boost your scraping efficiency and stay within safe limits. Remember, scraping that’s done the right way leads to sustainable data collection, so start applying these methods now and tap into the vast data available online!

About Skyvern

Skyvern is an AI-driven platform that automates browser-based workflows using large language models and computer vision, enabling users to perform tasks like form submissions and data extraction effortlessly.

With its innovative approach, Skyvern significantly reduces the fragility of traditional automation methods, adapting seamlessly to changes in website layouts, making it an essential tool for efficient online operations. Explore Skyvern today and transform your workflows!